Cultura y noticias hispanas del Valle del Hudson

Usos y Costumbres

AI Bias

By Nohan Meza

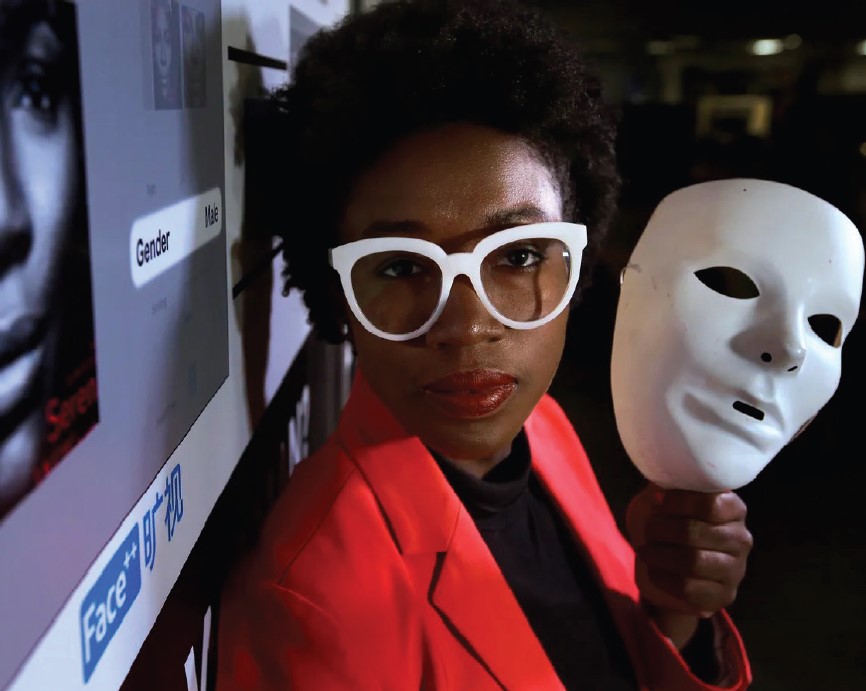

December 2021

In recent years, humanity has been entering what is often called the “AI age,” driven by advances in artificial intelligence and, in particular, the ubiquitous use of facial recognition. Now is the time to ask who creates this technology, what prejudices it carries, and what actions are available to us to ensure an inclusive technological future.

“Technology should work for all of us, not just some.” These are the words of Joy Buolamwini, executive director of the Algorithmic Justice League (AJL), on the use of AI and facial recognition. While artificial intelligence systems mediate many of our interactions with the world, from the autocorrect on our phones to the facial identification systems used in customs and immigration centers, many people do not know how these technologies work or what risks arise while they are being created.

Artificial intelligence is technology in which computer programs perform operations comparable to those of the human mind. Within this category, one of the fastest-growing areas is facial recognition. According to a Georgetown Law study, one in two adults in the United States is in a police facial recognition database. The risk is that, while the technology itself does not hold prejudices, those who write the code and those who use it can, and their prejudices can carry over into how the systems behave.

Who sees whom?

Studies by the AI Institute show that the root of the problem lies in the fact that the industry is dominated by white men, with little diversity and little training dedicated to considering racial or gender differences when creating intelligent systems. Because writing new programs is such an extensive process, the community usually shares its code on open-source platforms such as GitHub. As a result, these prejudices and misrepresentations can spread across the industry quickly and globally.

A computer can be taught to recognize faces, but if it is trained only on a database with little diversity, the resulting systems fail to recognize the full range of human diversity, and that failure affects our day-to-day lives. These intelligent computers and systems ‘learn’ to see a white world. Today, AI and facial recognition systems are used in housing, banking, cell phone applications, and more. The consequences could be as simple as having trouble entering your apartment building, or as serious as being charged with a crime you did not commit. They would disproportionately affect Black and Hispanic people.

The risks are not limited to the United States: in Argentina, Brazil, and Uruguay, laws have been passed that allow the government to use invasive facial recognition systems for the purported purpose of maintaining public safety.

Computing change

Is this the Orwellian society toward which humanity is headed? Maybe. But that doesn't mean each of us has no role to play in creating a better future.

The AJL offers several resources for auditing current AI systems and for sharing personal testimonies. But beyond activism, change begins with awareness and with analyzing our technology: who codes matters, how we code matters, and why we code matters.
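To make the idea of an audit concrete, here is a minimal sketch of one common check: comparing how often a system is wrong for each demographic group. This is not the AJL's own tooling. The function names, the group labels, and the sample results below are all hypothetical and invented purely for illustration.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the share of wrong predictions for each group.

    `records` is an iterable of (group, predicted, actual) tuples.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the worst and best per-group error rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical, made-up results from a face-matching system.
# Each entry: (group label, what the system said, what was true).
sample = [
    ("group_a", "match", "match"),
    ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_a", "match", "match"),
    ("group_b", "match", "no_match"),
    ("group_b", "match", "match"),
    ("group_b", "no_match", "match"),
    ("group_b", "match", "match"),
]

rates = error_rate_by_group(sample)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.0%} of results were wrong")
print(f"gap between groups: {largest_gap(rates):.0%}")
```

A system can look accurate on average while still failing one group far more often than another, which is why an audit of this kind reports results per group rather than as a single overall score.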

In the longer term, it is also essential to diversify the AI industry by creating opportunities that bring a wider range of people into it. Only in this way can intelligent systems be created that work for all the races and ethnicities of our planet. In the words of Joy Buolamwini, director and founder of the AJL, “We have used computer tools to unlock an immense abundance. Now we can create even more equity by putting social change at the center of our technological advancement rather than at the secondary level.”

Resources

The Algorithmic Justice League (AJL)

What is Equitable AI

Taking Action

Documentary ‘Coded Bias’ available on Netflix

COPYRIGHT 2021

La Voz, Cultura y noticias hispanas del Valle de Hudson
